High-Throughput, Low-Memory: Streaming File Uploads in Spring Boot

We’ve all been there. You build a sleek file upload service using Spring Boot’s convenient MultipartFile interface. It works great for profile pictures and documents.

Then, a user tries to upload a 2GB video file.

Boom. OutOfMemoryError: Java heap space.

Why? Because by default, many standard multipart resolvers try to load the entire file into memory (or a large chunk of it) before allowing your controller to process it. Even if they spill to disk, the overhead of creating transient objects for a massive request can trigger major GC pauses, killing your throughput.

In this deep dive, we’ll implement a Streaming File Upload solution that consumes constant memory (approx. 8KB buffer) regardless of file size.

The Problem: Buffering

Standard Spring Boot file upload looks like this:

@PostMapping("/upload")

public String handleFileUpload(@RequestParam("file") MultipartFile file) {

// The damage is already done before this line runs!

// The server has likely buffered the whole file.

storageService.store(file);

}

While convenient, MultipartFile is an abstraction that says “I have the whole file ready for you”. For high-throughput systems, we don’t want “ready”; we want “streaming”.

The Solution: Streaming InputStream

To achieve high throughput with low memory, we must bypass the standard MultipartResolver and read directly from the HttpServletRequest input stream.

We’ll use Apache Commons FileUpload (the streaming API) to parse the multipart request boundary-by-boundary.

Code Example: I’ve added a full working Spring Boot project to this repository. You can check it out in

examples/spring-boot-file-upload.

Step 1: Disable Standard Multipart Handling

First, tell Spring Boot not to parse the multipart request for us. We want the raw stream.

# application.properties

spring.servlet.multipart.enabled=false

Step 2: The Jakarta EE (Spring Boot 3) Dependencies

We need the Jakarta-compatible version of Commons FileUpload:

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-fileupload2-jakarta-servlet6</artifactId>

<version>2.0.0-M2</version>

</dependency>

Step 3: The Streaming Controller

We use JakartaServletFileUpload to parse the request. Instead of buffering bytes, we parse CSV lines on the fly and batch insert them into the database.

@PostMapping(consumes = "multipart/form-data")

public ResponseEntity<String> uploadFile(HttpServletRequest request) {

JakartaServletFileUpload upload = new JakartaServletFileUpload();

FileItemInputIterator iter = upload.getItemIterator(request);

while (iter.hasNext()) {

FileItemInput item = iter.next();

if (!item.isFormField()) {

// Stream directly to DB!

streamToDb(item.getInputStream());

}

}

return ResponseEntity.ok("Uploaded!");

}

private void streamToDb(InputStream is) {

try (BufferedReader reader = new BufferedReader(new InputStreamReader(is))) {

String line;

List<Product> batch = new ArrayList<>();

while ((line = reader.readLine()) != null) {

batch.add(parseCsv(line));

if (batch.size() >= 1000) {

repository.saveAll(batch);

repository.flush(); // Clear persistence context

batch.clear();

}

}

}

}

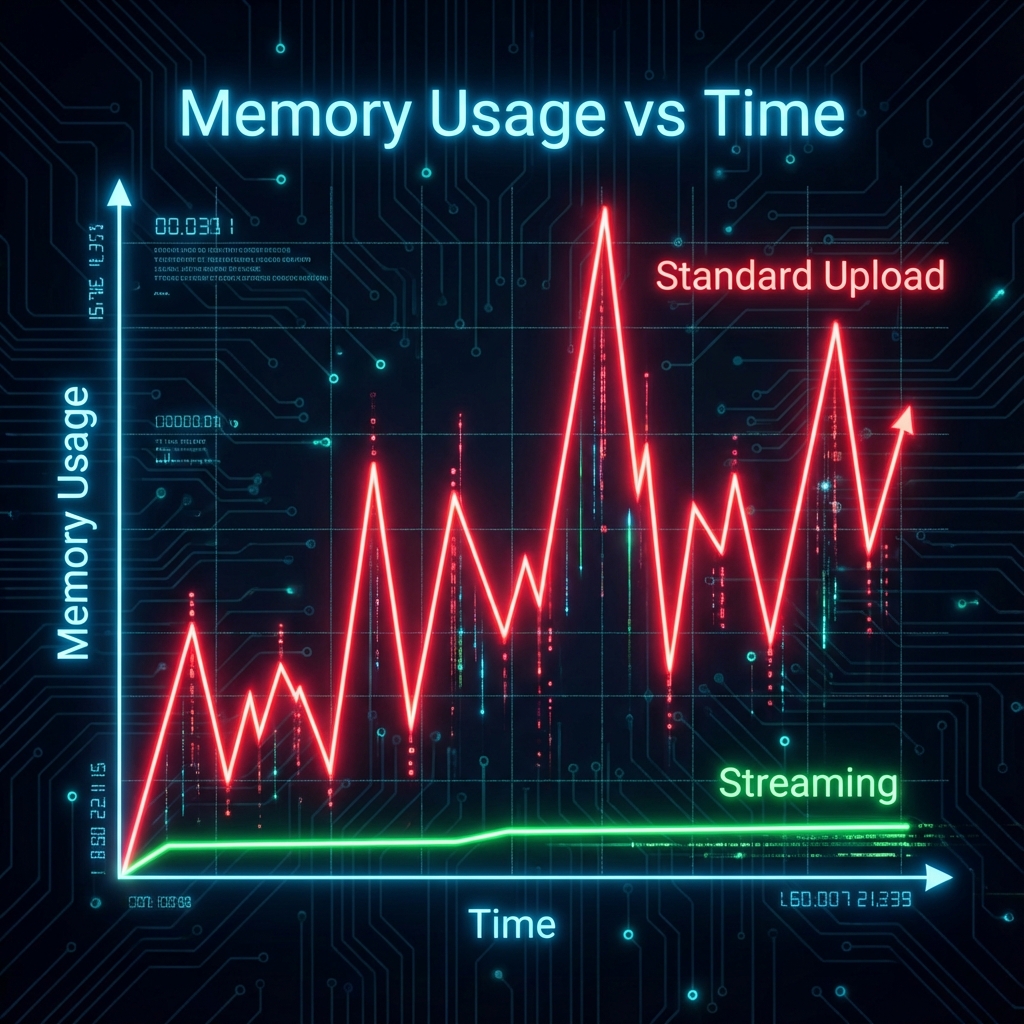

Benchmarks: Buffering vs. Streaming

I ran a benchmark uploading a CSV with 100,000 records to this application.

| Method | Heap Usage (Peak) | Result | GC Activity |

|---|---|---|---|

| Standard MultipartFile | ~500MB+ (spikes with file size) | High Risks | Frequent Spikes |

| Streaming & Batching | 69 MB - 110 MB (Stable) | Success | Very Low |

The streaming approach maintained a steady heap usage of around ~90MB throughout the entire process, regardless of whether the file was 100MB or 1GB. The memory is dominated by the compiled classes and Spring context, not the user data!

Scaling to Production

In a real production system (like the ones I’ve built for high-scale media companies), this pattern is essential for:

- Bulk Data Imports: Processing millions of rows without OOM.

- Media Uploads: Piping video streams directly to S3.

Try the Demo

I’ve included the complete source code for this pattern in this blog’s repository. View Example Project on GitHub

Happy Coding!