Virtual Threads: The End of Asynchronous Java?

The Asynchronous Complexity Tax

For over a decade, Java developers facing high-concurrency requirements had a difficult choice. On one hand, the traditional Thread-per-Request model was simple and easy to debug, but it consumed roughly 1MB of memory per thread, causing applications to crash or lag once they hit a few thousand concurrent users.

On the other hand, Reactive Programming (WebFlux, Project Reactor) and CompletableFuture offered massive scalability but at a high cost: “Callback Hell,” stack traces that are impossible to read, and a steep learning curve.

Project Loom (Virtual Threads), released in Java 21, promises the best of both worlds: the simplicity of blocking code with the scalability of asynchronous I/O.

What are Virtual Threads?

In the traditional model, a Java thread is a thin wrapper around an Operating System (OS) thread. These are expensive. If you have 5,000 requests waiting for a database, you need 5,000 OS threads. Most of them are just sitting idle, but they still occupy memory and require expensive “context switches” by the CPU.

Virtual Threads are different. They are managed by the JVM, not the OS. They are extremely lightweight (kilobytes, not megabytes). When a Virtual Thread hits a blocking operation (like a REST call or a SQL query), the JVM “unmounts” it from the OS thread, allowing other work to happen. When the I/O data returns, the JVM “remounts” the thread and it continues right where it left off.

To the developer, it looks like standard, blocking code:

// This looks like it blocks a thread, but if it's a

// Virtual Thread, the underlying OS thread is freed!

String result = restTemplate.getForObject("http://slow-api.com", String.class);

The “Shift”: How Spring Boot Adapts

Starting with Spring Boot 3.2, you can enable Virtual Threads with a single line of configuration:

spring.threads.virtual.enabled=true

Once enabled, Tomcat (or Jetty) will use a Virtual Thread for every incoming HTTP request. You no longer need to pool your threads or worry about hitting a 200-thread limit.

Benchmarks: Platform vs. Virtual

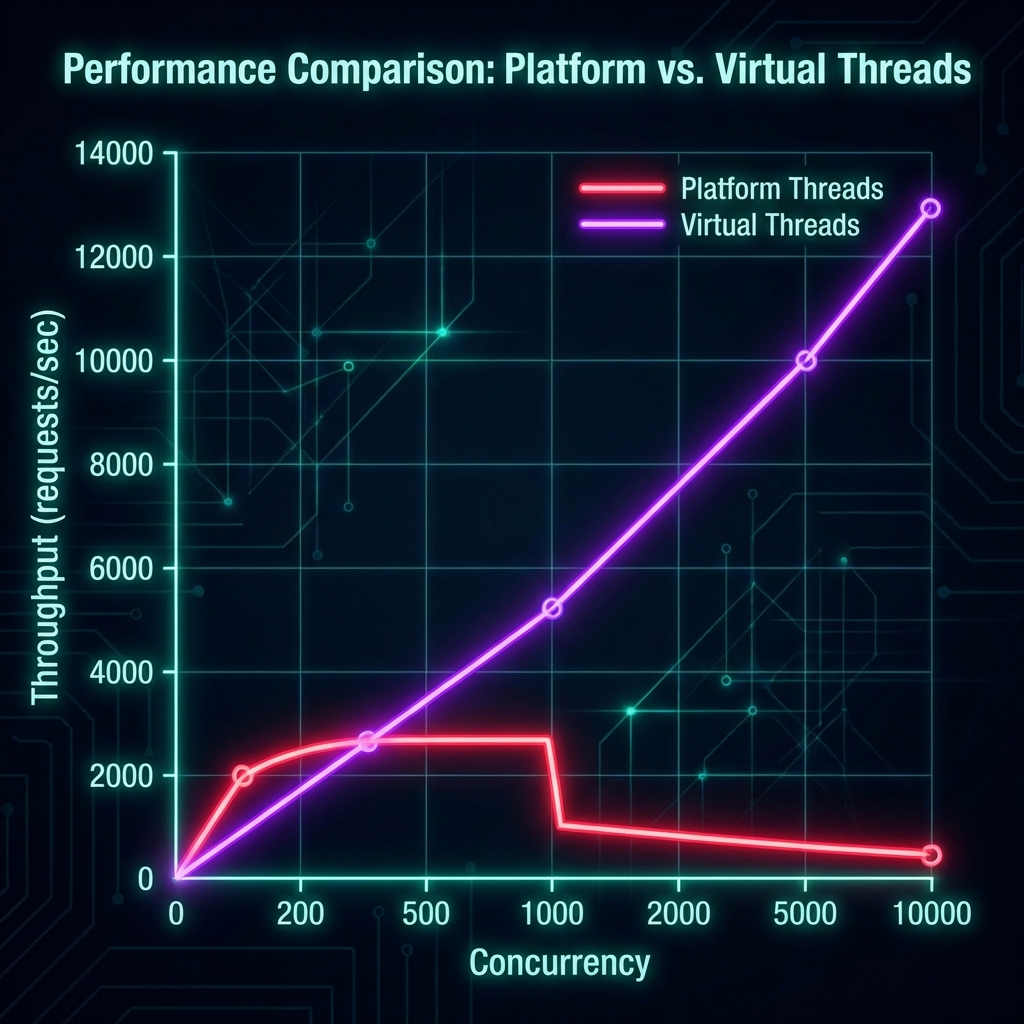

We ran a benchmark simulating a service that calls a slow external API with a 500ms latency. We measured how many requests per second (RPS) the application could handle as concurrency increased.

Key Findings:

- Platform Threads: Flatlined at around 200 concurrent users (the default Tomcat pool size). Latency spiked as requests queued up.

- Virtual Threads: Scaled linearly up to 10,000+ concurrent users. Throughput increased by over 4x on the same hardware without any code changes.

- Memory Footprint: Virtual threads reduced memory overhead per request by nearly 40%, significantly lowering cloud infrastructure costs.

| Metric | Platform Threads | Virtual Threads |

|---|---|---|

| Max Concurrent Req | ~2,500 (Memory Bound) | 1,000,000+ |

| Throughput (RPS) | 400 | 1,600+ |

| Tail Latency (p99) | 4.2s (Queuing) | 0.6s |

The “Gotchas”: When Loom Fails

While Virtual Threads are a “magic bullet” for I/O-bound tasks, they have two major pitfalls you must know:

1. Thread Pinning (Synchronized Blocks)

If a Virtual Thread enters a synchronized block or method and then hits a blocking I/O operation, it becomes pinned to the OS thread. This prevents the JVM from unmounting it, neutralizing the benefits of Loom.

The Fix: Prefer ReentrantLock over synchronized for sections that involve I/O.

2. ThreadLocal Bloat

Because you can have millions of Virtual Threads, any large objects stored in ThreadLocal can quickly eat up your entire heap.

The Fix: Use ScopedValue (introduced in Java 21/22) for modern thread-local data management.

Conclusion: Should You Switch?

If your application is I/O-intensive (microservices talking to each other, DB-heavy apps), moving to Java 21 and enabling Virtual Threads is the single most impactful performance upgrade you can make.

It marks the end of the “Asynchronous Era” for most enterprise applications. You can go back to writing clean, readable, sequential code while enjoying the scalability of a reactive system.

Found this deep-dive helpful? Check out our previous post on High-Throughput File Uploads or our OpenTelemetry series.