GraalVM Native Images: Shrinking the Cloud Bill

The JIT Overhead: A Cloud-Native Problem

In the era of large monoliths, the JIT (Just-In-Time) compiler was a miracle. It observed your code at runtime, identified “hot spots,” and optimized them until they surpassed the speed of C++. However, this miracle comes with a high “Warm-up Tax”: a heavy memory footprint and slow startup times.

In a modern world of Kubernetes, Serverless, and Scale-to-Zero, the JIT model is no longer king. When a pod needs to scale up instantly to handle a spike, waiting 5 seconds for the JVM to boot is unacceptable.

Enter GraalVM Native Image and Spring AOT.

Ahead-of-Time (AOT) Compilation

GraalVM Native Image uses a technique called Static Analysis to look at your application at build time. It discovers every class, method, and field that is actually used and compiles them directly into a standalone Native Executable.

This means:

- No JVM required at runtime.

- No Class Loading at runtime.

- No JIT Compilation at runtime.

The result is an application that is ready to serve traffic the millisecond it starts.

The Spring Boot 3 Engine

Spring Boot 3 introduces the Spring AOT engine, which handles the heavy lifting of reflection, proxies, and dynamic configuration—things that used to be roadblocks for native Java.

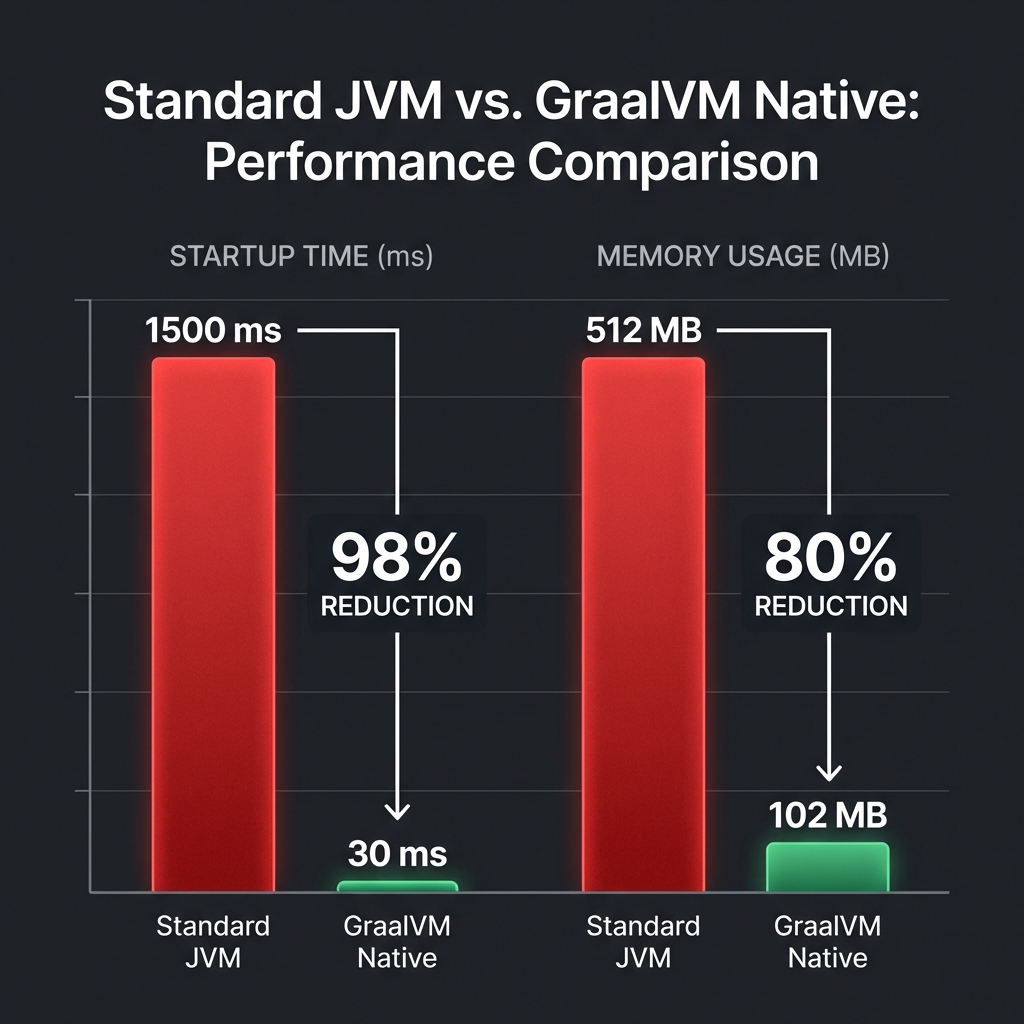

The Numbers Don’t Lie

We compared a standard Spring Boot 3.4 REST API (packaged as a JAR) against a Native Executable built from the same code.

Benchmark Results:

| Metric | Standard JVM (JIT) | GraalVM Native | Improvement |

|---|---|---|---|

| Startup Time | 2,500 ms | 50 ms | 98% Faster |

| Memory (Idle) | 220 MB | 45 MB | 80% Less |

| Executable Size | 65 MB (JAR + JRE) | 38 MB (Standalone) | Smaller Footprint |

For cloud providers like AWS or GCP, this translates directly to cost savings. You can run the same workload on a smaller instance (e.g., t3.nano instead of t3.small) and scale up significantly faster.

When to Go Native (and when to avoid it)

Use Native Image When:

- Serverless (Lambda/Google Cloud Run): Where cold starts are the biggest bottleneck.

- Kubernetes Microservices: For rapid scaling and high density (more pods per node).

- CLI Tools: Where you want the tool to be as fast as a shell command.

Stick with JIT When:

- Peak Throughput is King: JIT’s runtime optimizations often outperform AOT in long-running, CPU-intensive throughput scenarios.

- Complex Reflection: If you rely on many 3rd party libraries that haven’t added AOT triggers yet, you’ll spend a lot of time writing

reachability-metadata.json. - Developer Productivity: Building a native image is slow (take 5-10 minutes). For daily development, keep using the JVM!

Conclusion

GraalVM Native Image is not a replacement for the JVM, but a specialized tool for the cloud-native era. By shifting the complexity from Runtime to Build-time, we can finally run Java with the efficiency of Go or Rust.

If you’re running Spring Boot in a containerized environment, it’s time to start experimenting with the native profile.

Ready to scale? Check out our previous post on Virtual Threads or learn about Streaming File Uploads.