Virtual Threads + Structured Concurrency: The Future of Java Parallelism

Beyond the Basics: The Problem with Unstructured Parallelism

In our previous article on Virtual Threads, we explored how Project Loom revolutionized Java concurrency by making threads cheap. But there’s a hidden danger: unstructured parallelism.

When you manually fork multiple tasks (even with Virtual Threads), you face the “Three Horsemen” of concurrent programming:

- Resource Leaks: Forgetting to wait for a task means it keeps running even if you don’t need it anymore.

- Cancellation Hell: If one task fails, how do you cleanly cancel the others?

- Error Propagation: Aggregating exceptions from multiple concurrent tasks is painful.

Structured Concurrency, introduced as a preview in Java 21, solves all three.

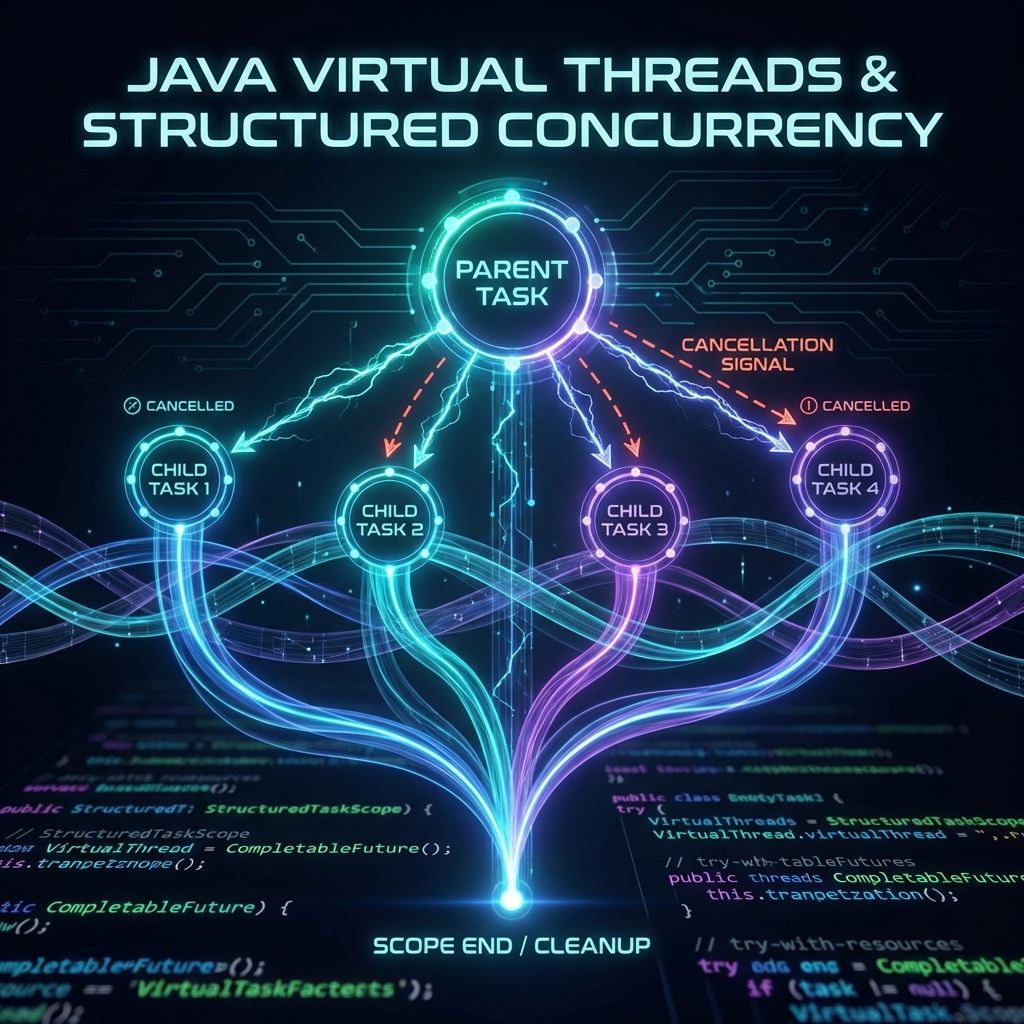

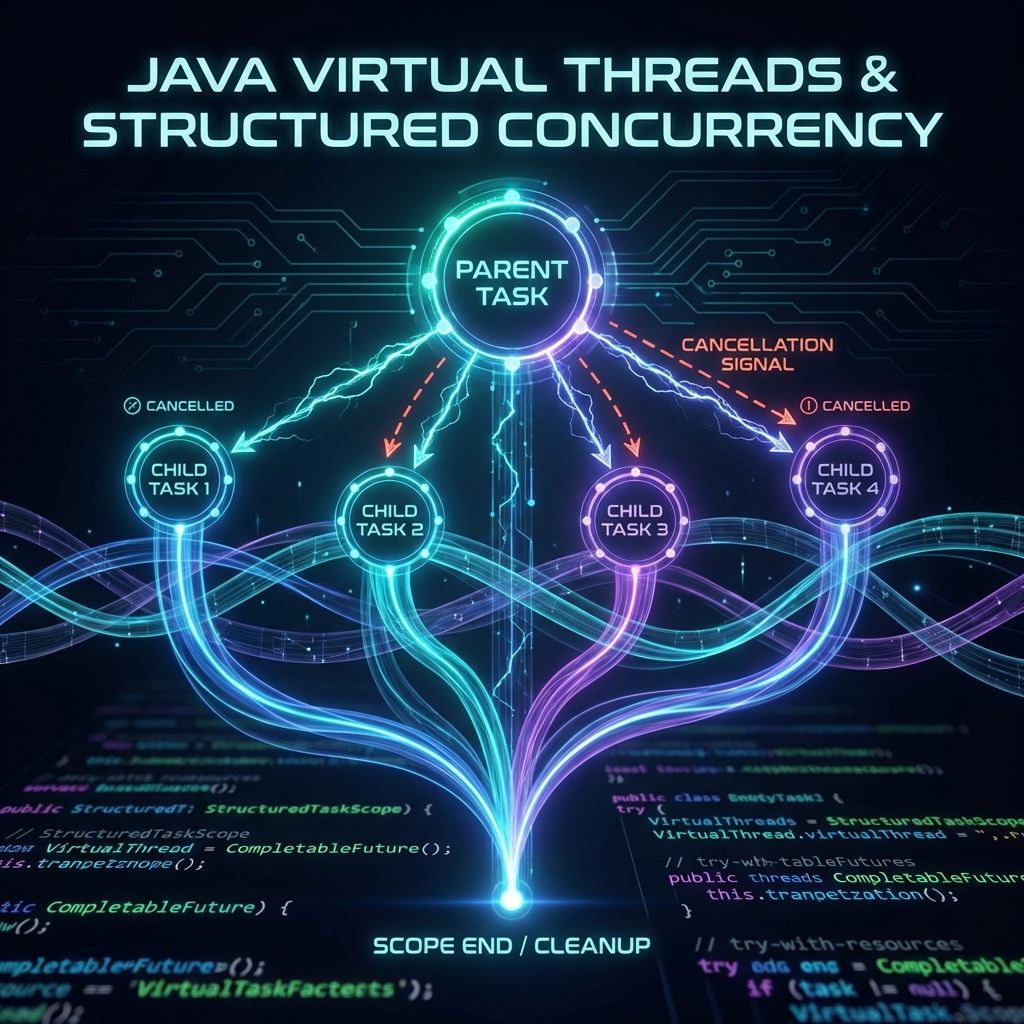

What is Structured Concurrency?

Structured Concurrency treats concurrent tasks like lexical scopes. Just as a variable defined in a block is automatically cleaned up when the block exits, tasks forked within a StructuredTaskScope are automatically managed.

The

Core API: StructuredTaskScope

try (var scope = new StructuredTaskScope.ShutdownOnFailure()) {

var task1 = scope.fork(() -> fetchUser(id));

var task2 = scope.fork(() -> fetchOrders(id));

var task3 = scope.fork(() -> fetchRecommendations(id));

scope.join(); // Wait for all tasks

scope.throwIfFailed(); // Propagate first exception

// All tasks succeeded, aggregate results

return new Dashboard(task1.get(), task2.get(), task3.get());

}

// Scope auto-closes: any tasks still running are cancelled!

Key Benefits:

- Automatic Cancellation: If one task fails, all others are immediately cancelled.

- No Resource Leaks: The

try-with-resourcesensures cleanup even if you forget to wait. - Structured Error Handling: Exceptions bubble up predictably.

Real-World Example: Parallel API Aggregation

Our demo project (examples/java-structured-concurrency/) simulates a user dashboard that fetches data from three microservices:

- User service: 200ms latency

- Orders service: 300ms latency

- Recommendations service: 250ms latency

The Traditional Approach (Sequential):

public DashboardData getDashboard(String id) {

var user = fetchUser(id); // 200ms

var orders = fetchOrders(id); // 300ms

var recommendations = fetchRecommendations(id); // 250ms

return new DashboardData(user, orders, recommendations);

}

// Total: 750ms 🐢

With StructuredTaskScope (Parallel):

try (var scope = new StructuredTaskScope.ShutdownOnFailure()) {

var userTask = scope.fork(() -> fetchUser(id));

var ordersTask = scope.fork(() -> fetchOrders(id));

var recommendationsTask = scope.fork(() -> fetchRecommendations(id));

scope.join();

scope.throwIfFailed();

return new DashboardData(

userTask.get(),

ordersTask.get(),

recommendationsTask.get()

);

}

// Total: 300ms (longest task) 🚀

Result: 60% faster response time with zero risk of resource leaks!

The “Gotchas”

1. Preview Feature (Java 21-23)

Structured Concurrency is still in preview. Enable it with:

javac --enable-preview --release 21 MyClass.java

java --enable-preview MyClass

2. Shutdown Policies

ShutdownOnFailure: Cancels all tasks if any fails (fail-fast).ShutdownOnSuccess: Cancels all tasks once any succeeds (race pattern).

Choose based on your use case!

3. Exception Aggregation

Only the first exception is thrown. If multiple tasks fail, you only get one. For full visibility, inspect scope.exception() manually.

Benchmarks: Structured vs. Traditional

| Scenario | Sequential | Structured Concurrency | Speedup |

|---|---|---|---|

| 3 Parallel APIs (250ms avg) | 750ms | 300ms | 2.5x Faster |

| Error Handling (1 fails) | All complete, then fail | Instant cancellation | Immediate |

| Resource Cleanup | Manual ExecutorService.shutdown() |

Automatic (try-with-resources) | Zero leaks |

Conclusion: The Future is Structured

Structured Concurrency combines the performance of Virtual Threads with the safety of lexical scoping. It’s the missing piece that makes concurrent Java code as simple and reliable as sequential code.

If you’re already using Virtual Threads, upgrading to Structured Concurrency is a no-brainer for any scenario involving parallel subtasks.

Want to dive deeper? Check out our previous post on Virtual Threads or our GraalVM Native Images guide.